Sound Composition

The sound is the music

Composing Sound

In sound-based composition, sound replaces the musical note as the fundamental structural unit.

– Sound is the only form-bearing musical element.

– It is important to understand first the significance of a single sound only, and then its relationship with two or more sounds.

– There are no melodic morfomata (formations).

– Notes have been abandoned in favor of delicately carved gestures, warm fizzing sounds, forcefully distorted sonorous variants and acoustic experiments with found objects (instruments).

– The functionality of the diatonic interval, harmony, motive and theme ceases to exist.

– Instead, sound composition reconsiders and re-evaluates these elements, proposing gestures, figures, textures, articulations, postures, symbolisms, and degrees of energy, often grouped into a single composite sonic entity.

– The notion of the thematic idea seeks solutions in sound objects with distinctive atmosphere, motion, mood, context and timbre.

– Sound composition may involve complex behaviors of found objects, sound synthesis, digital processing and instrumental sounds, deployed to lead the listener beyond the actual sound itself.

Sound as Texture

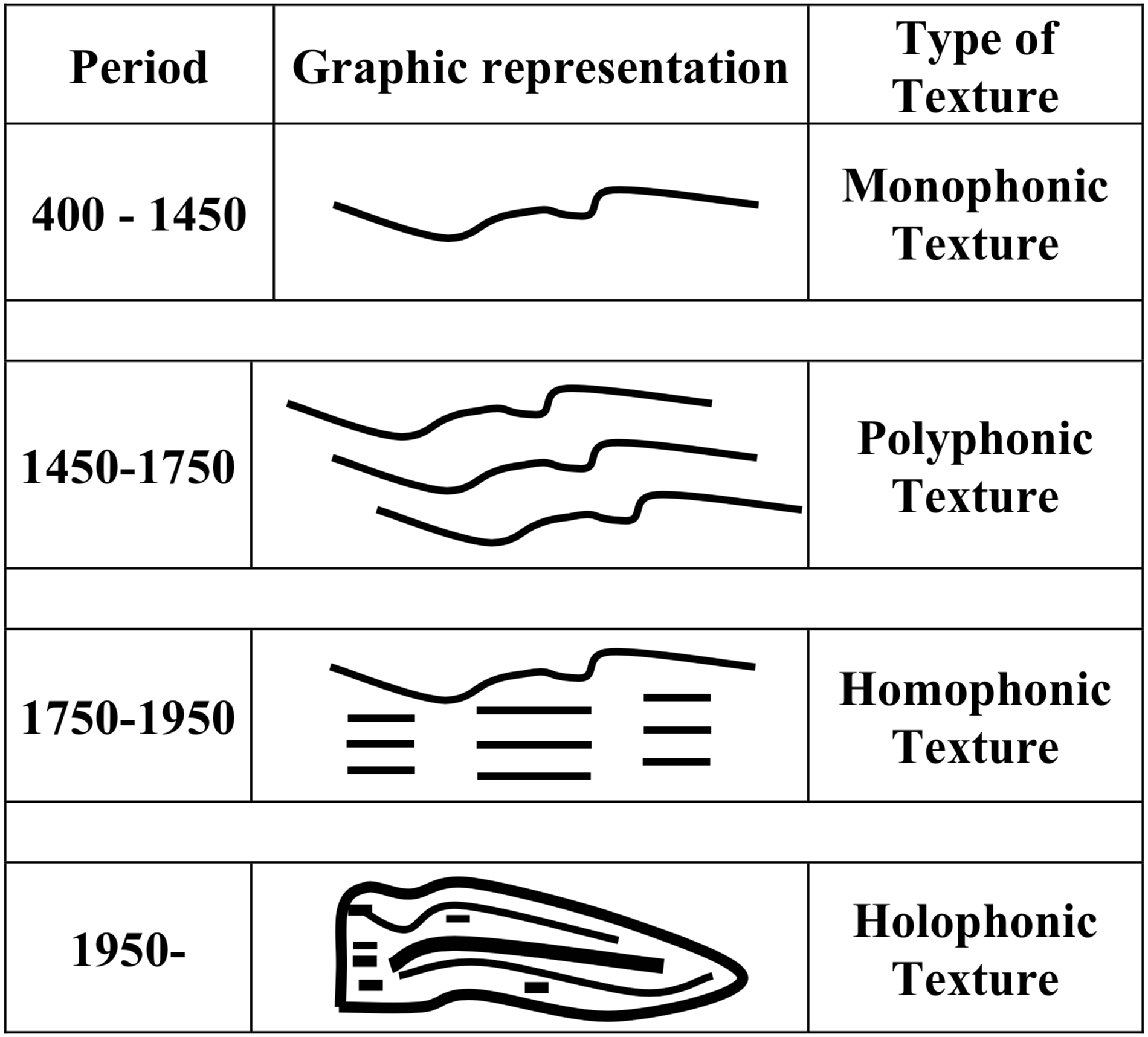

Holophony Musical Texture signifies my intention to define a rather general aesthetic frame for my work.

Holophonic musical texture is best perceived as the synthesis of simultaneous sound streams into a coherent whole with internal components and focal points.

Holophonic music is music whose texture is formed by the fusion of several sound entities, which lose their identity and independence in order to contribute to the synthesis of a whole.

The word Holophony is derived from the Greek word holos, which means [whole/ entire], and the word phone, which means [sound/ voice]. In other words, each independent phone (sound) contributes to the synthesis of the holos (whole).

– In a holophonic musical texture you cannot separate the rhythm from the pitch and the timbres.

– A holophonic musical texture does not consist of parts and cannot be partitioned. It exists as a whole.

– In particular a holophonic musical texture has one or more of the following characteristics:

.Granularity (rhythm complexity)

.Density

.Timbre similarity (homogeneity)

.Space singularity

.Sound continuity

– It is not only static but also gestural and rhythmical.

– Only one Holophonic Texture at a time. (see also monophonic texture, etc.)

– Holophonic Texture is not a matter of background versus foreground.

– In holophonic texture it is natural to fuse timbres from different registers. The point is to find the right timbres to create the fusion.

Instrumental Prosthetics

modifications, designs, prosthetics

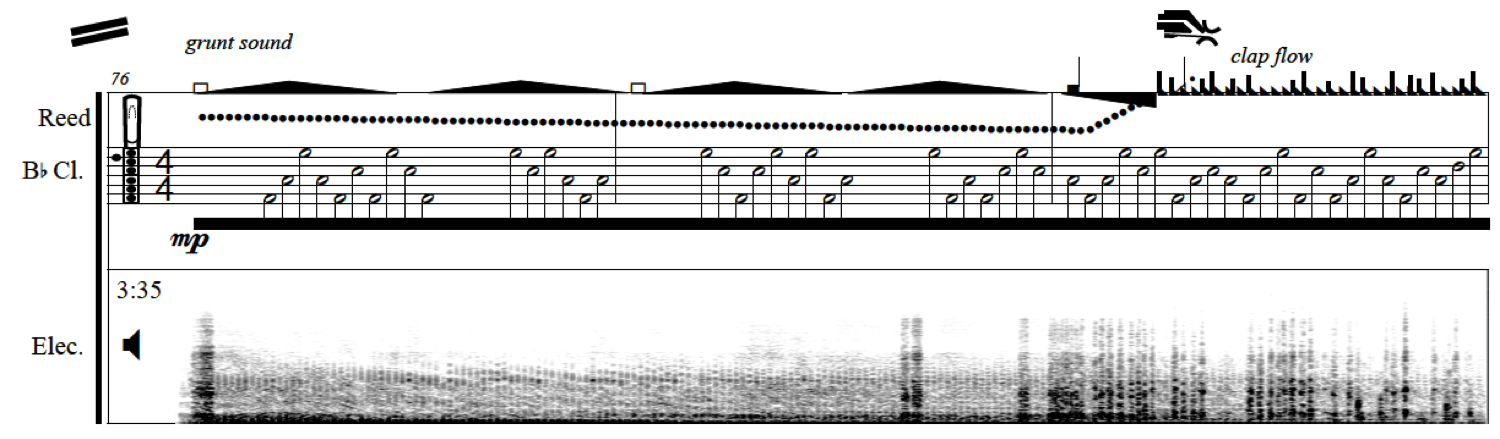

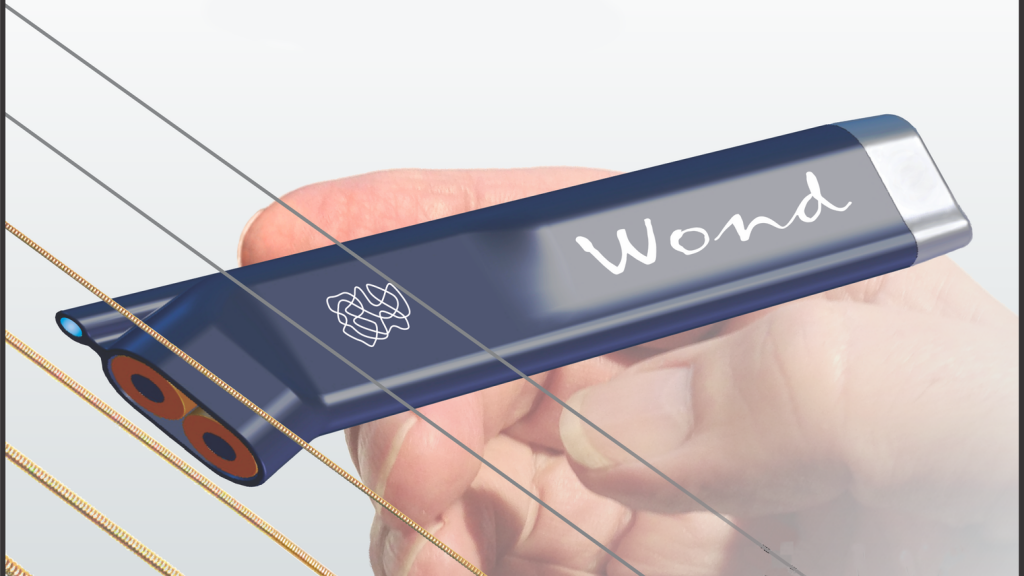

Ultra-thin Synthetic Reed

Ultra-thin Synthetic Reed for Bb clarinet, made using laser-cutting, 3D-printing and casting technologies. It offers new sound possibilities and performance techniques.

Refer to compositions Mutation, Conscious Sound and Hippo.

Flex Striker

Thin wooden stick, 30-40 cm (12 to 15 inches) long; 0.190” (4.80 mm, 0.48 cm, 3/16”) in diameter; rounded cone tip.

The quality of the wood must give strength and flexibility to the stick.

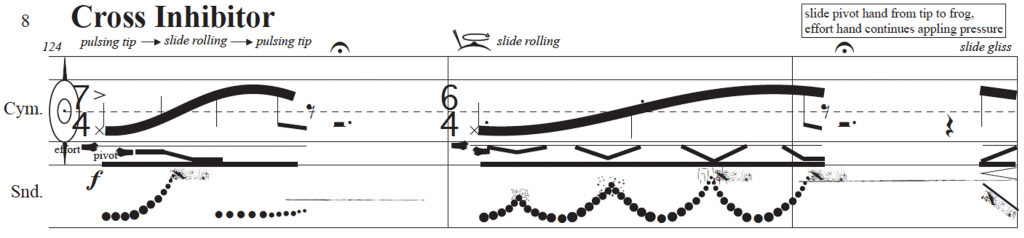

– STICK: The top and bottom sections are the stick spaces of the right and left hand respectively. Each displays the position on the stick (from tip/thin to base/thick) that hits the cymbal. The closer to the tip, the higher the sound; the closer to the base, the lower the sound. The medium-thickness line indicates the part of the stick that comes into contact with (strikes) the cymbal. A second line may appear; it indicates the holding positions of the two hands on the stick (“effort” and “pivot”).

– CYMBAL: The wide space between two lines, occasionally with a dashed line in the middle, indicates the playing position on the cymbal. The bottom half, from the edge to the bell of the cymbal, is towards the performer; the top half, from the bell to the top edge, is away from the performer. The wavy line indicates duration, position and pressure. The thicker the line, the softer the pressure; the thinner the line, the more pressure is applied with the stick against the cymbal.

– SOUND: The lower section of the staff provides a visualization of the sound to be produced. The vertical axis represents frequency and the horizontal axis time. Generally, the coloring represents the loudness of each frequency, from black for loud frequencies to white for silence. The patterns displayed in this section provide an arbitrary visualization of the sound to be produced, such as high/low, bright/dull, ordered/chaotic, coherent/erratic, smooth/coarse, soft/raspy, tonal/noisy, etc. In addition, a number of onomatopoeic and/or echomimetic words/letters are used to represent or imitate a sound or its context, such as aggressive, peaceful, a mournful cry of pain, mental and physical suffering, sorrow or pleasure.

Holophony Ensemble Project

The Ensemble Holophony Project is an ongoing project.

It started in 2003 with the recording of the piece Holophony for amplified string quartet. Initially it was a rather personal project, but recently it has been expanded and opened to the community.

The main aims of the project are to:

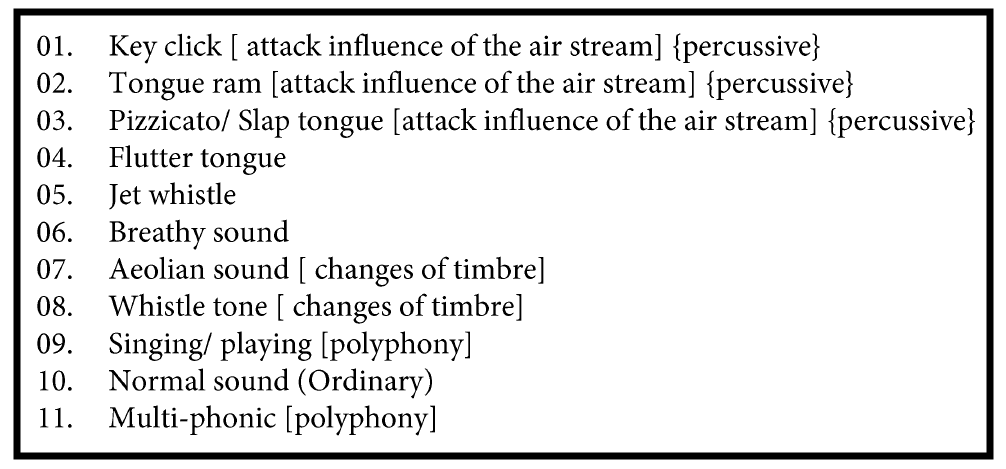

– collect and promote on its website compositions that use sound as the main form element and challenge traditional instrumental performance practice in search of new sound possibilities.

– play, catalogue and record sound-based compositions.

– bring together performers who are interested in making sound in time rather than playing notes in tempo.

– create a lexicon of new instrumental timbre techniques documented with video demonstrations, written explanations and/or notational examples.

– example –

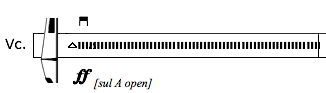

Cello Instructions

The first string should be very loose, almost to the point where there is no pitch when plucked. Please listen to the reference sound to adjust the tension appropriately.

ARCO CLANG

Triangle note head followed by a thick dashed line. Hold down and bow slowly with some pressure at the indicated area.

The two lines of the staff indicate the bridge at the bottom and the beginning of the fingerboard at the top. The space in between indicates the distance from bridge to fingerboard. Most of the time the left hand will either hold the instrument or mute the string.

The sound is best obtained at the frog of the bow. The further from the frog, the less “clangy” the sound becomes. For soft dynamics you should not apply extra bow pressure, or you might need to apply very little pressure in order to obtain the “clang” quality. The “clangs” can range from regular to irregular.

For more sounds with audio and video demonstrations please click on the link here.

Music and Consciousness

everything we experience is always unbelievably limited in comparison to our imagination, and that is the law of life. That is the meaning. Imagination creates the future. It is the purpose of life to develop spiritual freedom out of what limitation forces upon us, and to inwardly create new worlds. Someday they will come into existence, if they have been imagined clearly enough. But the imagination always races ahead.

― Stockhausen: Phantasie schafft Zukunft, August 14th 1996

What makes us feel drawn to music is that our whole being is music: our mind and body, the nature in which we live, the nature which has made us, all that is beneath and around us, it is all music.

― Indian Sufi master Hazrat Inayat Khan (1882-1927)

SENSE – Holistic Listening Experience

This project describes the construction of a system for tactile sound using tactile transducers and explores the compositional potential for a system of these characteristics.

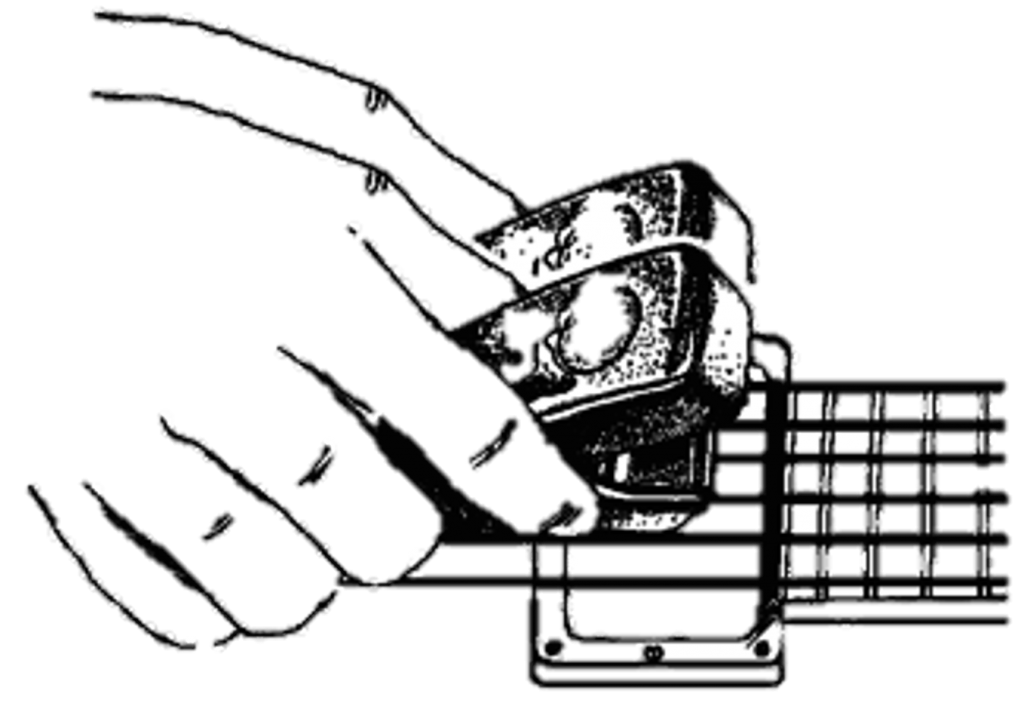

When a classical guitarist plays, not only the strings but the whole body of the guitar vibrates. Those vibrations are also sensed by the body of the performer as vibrotactile stimuli. As the performer plays, he or she feels the vibrations through the chest, foot and fingers, and sometimes through the jaw or zygomatic bone when leaning the head on the top of the guitar's side. This last posture is not part of standard classical guitar technique, but guitarists, including myself, have done it while practicing. Most instrumentalists will have a similar experience, depending on the acoustic and physical characteristics of the instruments they play. However, what a performer experiences during a recital is totally different from what the audience perceives, because the only channel of communication between the sound of the guitar and the audience is their ears. In other words, they miss all the tactility of the sound, which cannot be transmitted through the air.

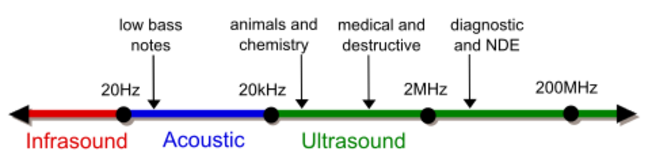

The purpose of the project was to create a holistic listening experience in which the audible listening experience is combined with the tactile experience of ultrasound and infrasound.

For more information visit the Texts page.

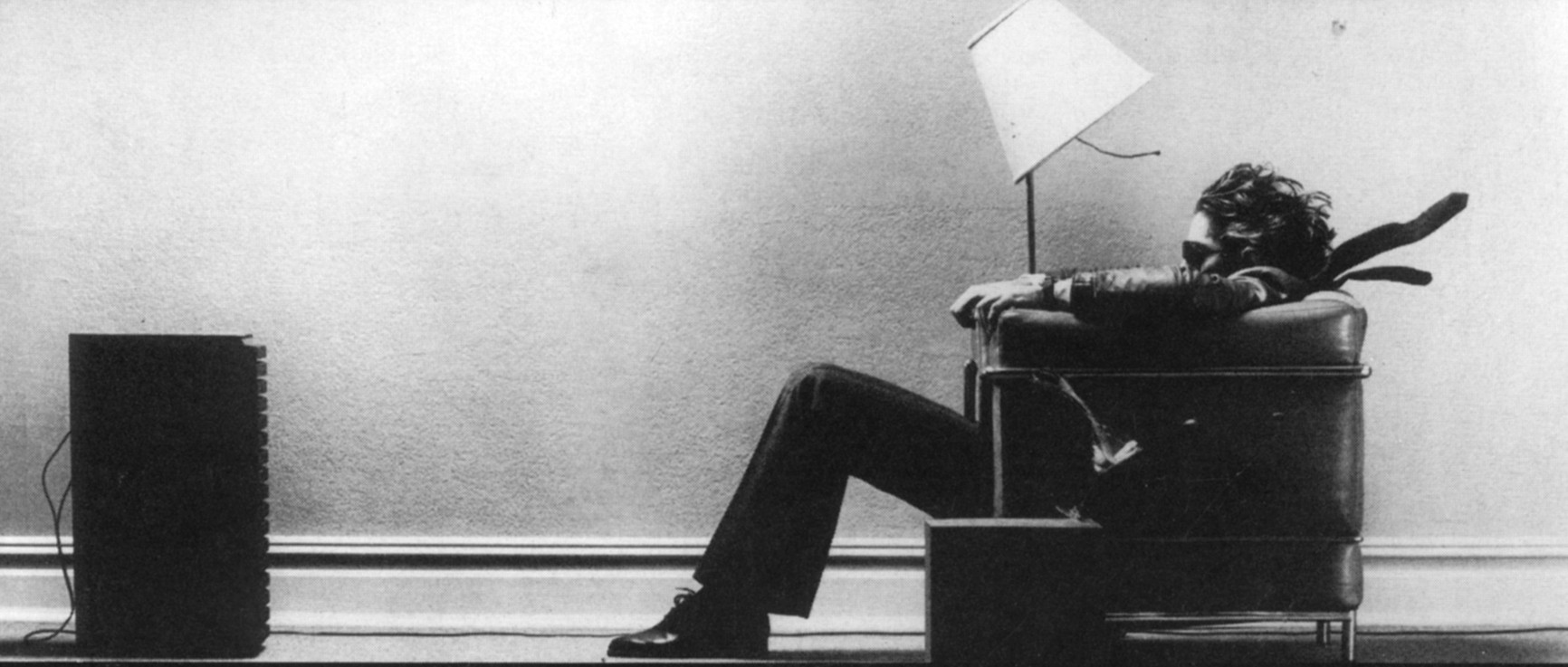

Left image: photograph by Steven Steigman (1978), known as the Blown-away Man, for a Hitachi Maxell ad. The ad shows a man being blown back by the tremendous sound from the speakers in front of him. The photo was an instant hit; it was a powerful statement that sound has power and force you can feel. Right image: Wilder Penfield’s sensory homunculus.

The Sense project converts these extreme frequencies into tactile stimuli received by the body. In Sense, the tactile stimuli are specifically composed in a musical context and aligned, or not, with the audible part, extending our listening experience far beyond its traditional confines. Inaudible soundscapes are revealed that have not been heard before, let alone in a musical context. As a result, the listening experience is a composition of both audible musical sounds and structured tactile energy, felt directly throughout the body.

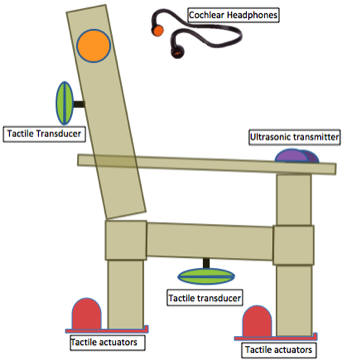

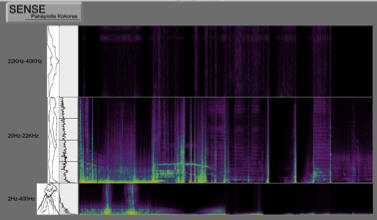

In order to test and explore the above compositional concepts, I have developed a simple interface with emphasis on the tactile properties of the sound. This interface is integrated into a customized chair with various types of tactile linear actuators and transducers. For the very high frequencies, above the human hearing range, I use a number of ultrasonic transducers. They generate a strong pressure field that can be sensed by the skin as tactile vibrations. The pressure field resembles the frequency structure and rhythmic characteristics of a part of the composition that is not otherwise audible. Ciglar [1] says, “the hypersonic audio signal is directly mapped onto the tactile domain. As a consequence of soundwave propagation through air, an interesting side effect occurs.”

The customized chair consists of one tactile actuator transducer in the front and one in the back, one tactile transducer on the seat and one at the back, and one ultrasonic transmitter for each palm (left and right). In addition, there is a 5.1 system that surrounds the chair and a pair of cochlear headphones. The cochlear headphones transmit the sound through bone conduction directly to the inner ear. For the headphone audio, a stereo reduction of the 5.1 version has been realized.
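A stereo reduction of a 5.1 mix can be sketched as below. This is only an illustration: the text does not say which coefficients or tools were used for the piece. The sketch assumes a widely used convention (centre and surrounds mixed in at about -3 dB, LFE omitted) and a hypothetical channel order of L, R, C, LFE, Ls, Rs.

```python
import math

# Assumed gain for centre and surround channels (about -3 dB).
MINUS_3_DB = 1.0 / math.sqrt(2.0)


def downmix_5_1_to_stereo(frame):
    """Fold one 5.1 sample frame into a (left, right) pair.

    `frame` is assumed to be ordered (L, R, C, LFE, Ls, Rs).
    The LFE channel is dropped, as is common in stereo reductions.
    """
    l, r, c, _lfe, ls, rs = frame
    left = l + MINUS_3_DB * c + MINUS_3_DB * ls
    right = r + MINUS_3_DB * c + MINUS_3_DB * rs
    return left, right


def downmix_block(frames):
    """Downmix a list of frames and scale down only if the result clips."""
    stereo = [downmix_5_1_to_stereo(f) for f in frames]
    peak = max((max(abs(a), abs(b)) for a, b in stereo), default=0.0)
    scale = 1.0 / peak if peak > 1.0 else 1.0
    return [(a * scale, b * scale) for a, b in stereo]
```

In a DAW the same result is normally obtained with a downmix plug-in or bus routing; the function only makes the arithmetic explicit.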

Part of the goal was to use only audio signals to compose and control the piece. Thus, the whole project was developed in a standard DAW at a 192 kHz sampling rate and 24-bit depth. The first six tracks used only the human hearing range. The Cochlear 1 and 2 channels used a stereo reduction of the 5.1 mix, covering the same frequency range but projected by the cochlear headphones and sensed via bone conduction. Both pathways play the same music. The advantage of this system is that it gives more depth to the listening experience, because the listener hears via bone conduction and air transmission at the same time.

The other two tracks (ultrasound) drive the two custom-made ultrasound transducers. The audio material is specially composed to deliver tactile sensation to the palms of the listener. The output of these tracks is routed to an amplitude modulation effect with a center frequency of 40 kHz [3]. That step was necessary to make the tactile sensation from the ultrasonic transducers possible on the palms. The remaining four tracks played frequencies below 20 Hz, or up to 500 Hz in some cases. Similarly, these tracks were specially composed not to be heard but to be felt as tactile vibrations.
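The amplitude modulation step can be illustrated with a minimal sketch. It assumes a plain sine carrier at 40 kHz whose amplitude follows the composed signal, rendered at the project's 192 kHz sampling rate (so the carrier sits well below the 96 kHz Nyquist limit). The modulation depth, the function names and the 200 Hz test signal are illustrative assumptions, not details of the effect actually used.

```python
import math

SAMPLE_RATE = 192_000  # Hz, sampling rate stated for the project
CARRIER_HZ = 40_000    # Hz, modulation frequency stated for the project


def amplitude_modulate(signal, sample_rate=SAMPLE_RATE,
                       carrier_hz=CARRIER_HZ, depth=1.0):
    """Impose `signal` (values in [-1, 1]) on a sine carrier.

    The carrier gain is (1 + depth * m) / (1 + depth), so the output
    stays within [-1, 1] for depth between 0 and 1 (assumed scheme).
    """
    out = []
    for n, m in enumerate(signal):
        carrier = math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
        gain = (1.0 + depth * m) / (1.0 + depth)
        out.append(gain * carrier)
    return out


def tactile_test_signal(freq_hz=200.0, seconds=0.01, sample_rate=SAMPLE_RATE):
    """A hypothetical low-frequency sine standing in for composed material."""
    count = round(seconds * sample_rate)
    return [math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
            for n in range(count)]


modulated = amplitude_modulate(tactile_test_signal())
```

The output itself is ultrasonic and inaudible; what the palm can register is the slow variation of its amplitude, which follows the composed material.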

MIR Composition Tools and Techniques

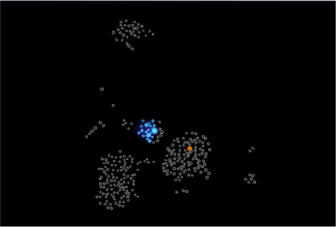

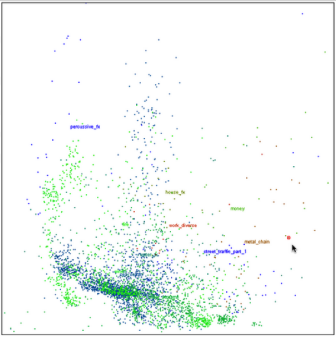

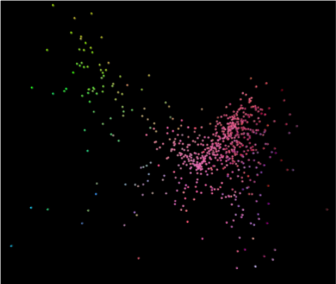

Flute Sound Proximity model

Instrumental Sound proximity models consist of a number of clusters and classes based on current classification methods.

Fab Synthesis

Fabrication Sound Synthesis (Fab Synthesis) is a way to organize and systematize a practice that has been in use since the 1950s and continues to develop today.

Fab Synthesis refers to a sound synthesis practice in which a sound performer agent effectively applies energy to physical resonator(s) while the resulting acoustic signal is recorded by conventional audio recording means.

First Mode Fab Synthesis – motor skills (gross, fine) –

Use of sound/found objects (resonant chambers, instruments, DIY) played only by hands and/or mouth and/or another passive excitation source (bow, pick, mallet).

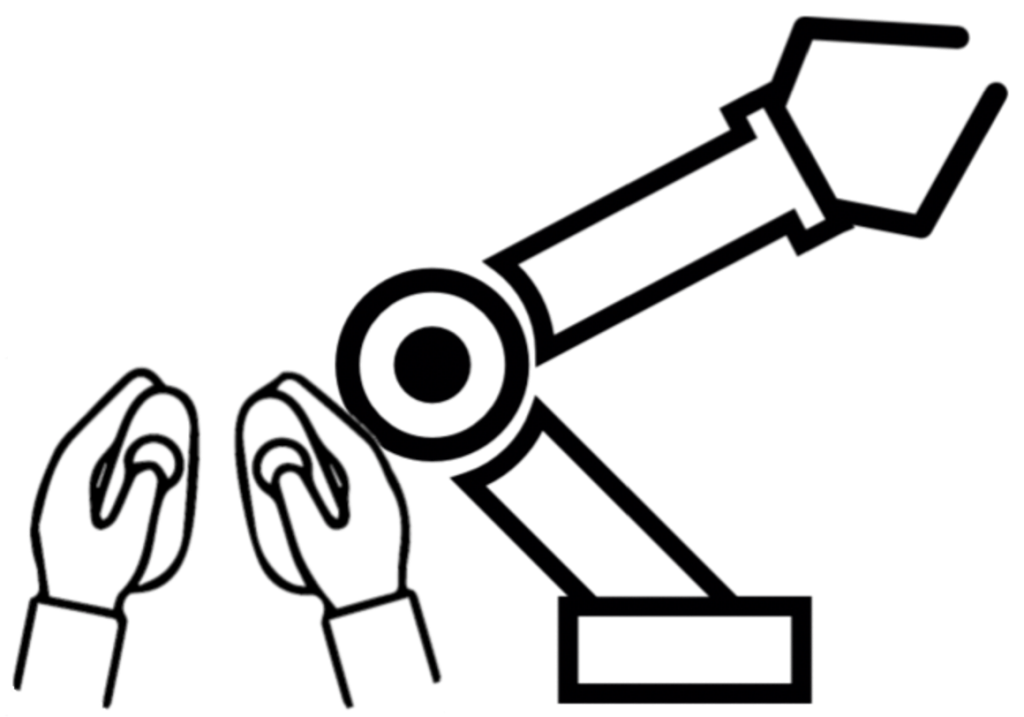

Second Mode Fab Synthesis – prosthetic –

Use of mechatronics controlled by hand and played on the instruments.

Third Mode Fab Synthesis – cyborg –

Use of mechatronics operated by hand via controllers in real time and played on the instrument.

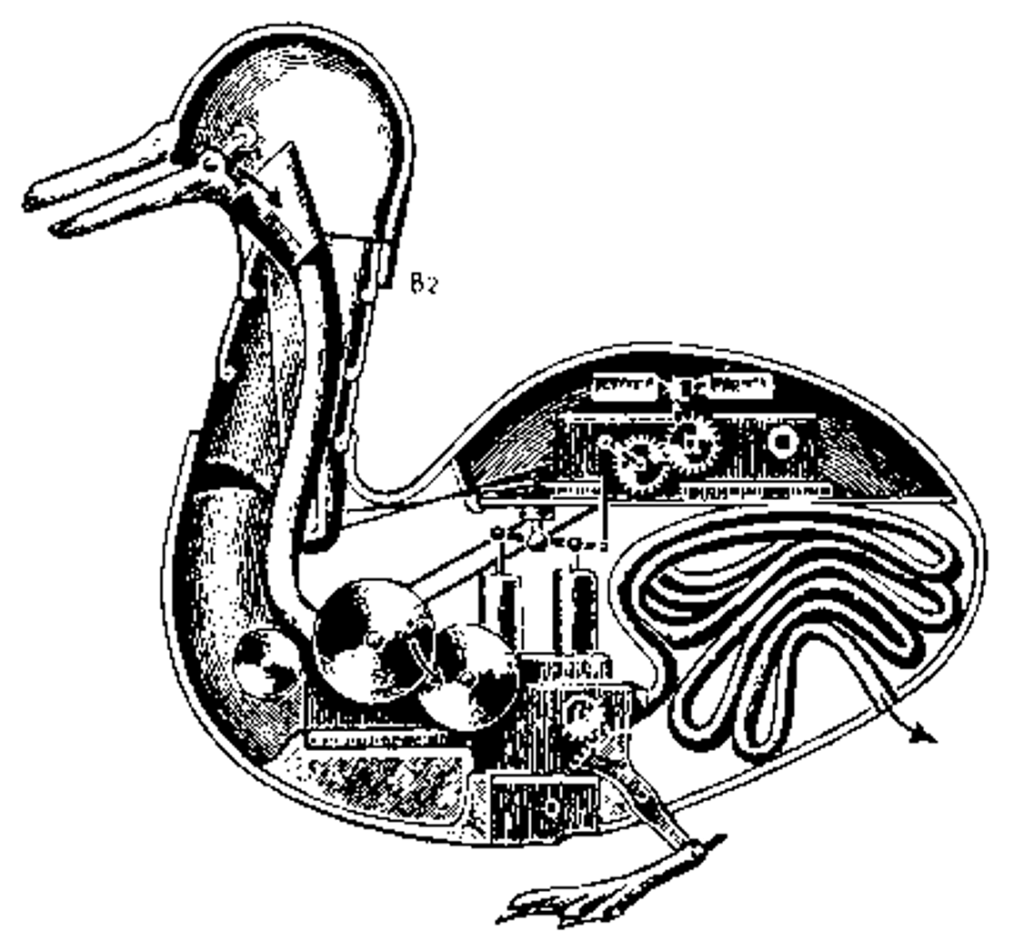

Fourth Mode Fab Synthesis – algorithmic –

Use of mechatronics alone to autonomously (e.g. programmed, AI, automaton) play the instrument. There is no human intervention during the sound performance.

The second mode facilitates the synergy of both human and electro-mechanical agents to co-manipulate the sound. The main characteristic of this mode is the use of mechanical or electromechanical devices and sensors (vibrators, solenoids, motors, cranks, etc.) controlled by hand and played on the instrument. The excitation and modulation gestures are triggered by either or both agents.

Fab Synthesis, 2nd mode: circular straw pan flute, turntable, air compressor and airbrush

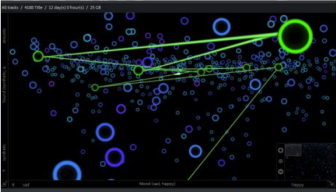

MAX Patches

A collection of MAX patches you might find useful.

Max interface for KORG nanoKONTROL (click on the image to download the patch)

You can send MIDI messages from the nanoKONTROL to Max, and from Max to any DAW (Live, Logic, Cubase, etc.).

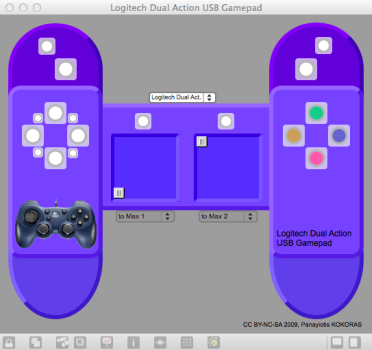

Max interface for Logitech Dual Action USB Gamepad (click on the image to download the patch)

Converts the gamepad data into MIDI for use with VST instruments and any DAW such as Ableton Live, Cubase or Logic. It sends noteout or ctlout data. Hold down any button to sustain the sound.
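The kind of conversion such a patch performs can be sketched outside Max. The following is not the patch itself: it is a minimal Python illustration that assumes stick positions normalised to [-1, 1] and a hypothetical mapping of buttons to consecutive notes starting at middle C. Only the MIDI status bytes (note on 0x90, note off 0x80, control change 0xB0) are standard.

```python
def axis_to_cc_value(axis):
    """Map a stick position in [-1.0, 1.0] to a MIDI value 0..127."""
    clamped = max(-1.0, min(1.0, axis))
    return round((clamped + 1.0) / 2.0 * 127)


def control_change(controller, value, channel=0):
    """Build a 3-byte control change message (what ctlout would send)."""
    return bytes([0xB0 | channel, controller & 0x7F, value & 0x7F])


def button_event(button_index, pressed, base_note=60, velocity=100, channel=0):
    """Build a note message for a button (what noteout would send).

    Pressing sends note on and releasing sends note off, so the sound
    sustains for as long as the button is held. `base_note` and
    `velocity` are illustrative defaults.
    """
    note = (base_note + button_index) & 0x7F
    if pressed:
        return bytes([0x90 | channel, note, velocity])
    return bytes([0x80 | channel, note, 0])
```

For example, `control_change(1, axis_to_cc_value(0.5))` would turn a half-deflected stick into a modulation-wheel message on the first MIDI channel.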

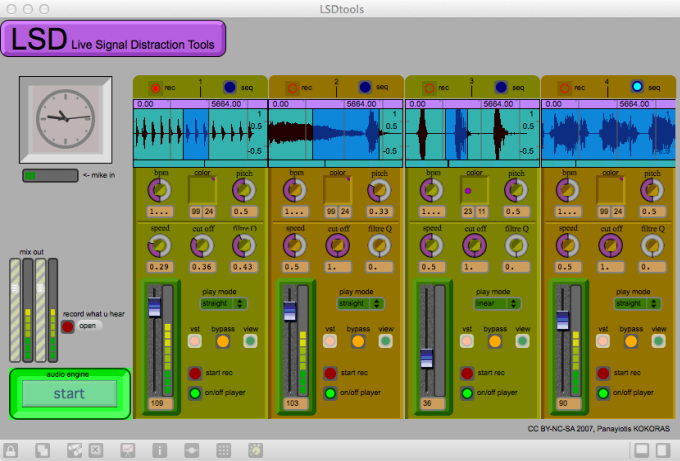

Live Signal Distraction Tools: four-track live performance environment (click on the image to download the patch; for a video demo click here)

LSDtools is a four-track patch designed to process audio files and live sound in real time. Its features include pitch shift, a filter with cutoff and resonance, distortion, various types of playback, external VST effects, and step sequencer functions.